Ladies and Gentlemen, welcome to the main event of the evening. The SQL Undercover Smackdown Heavy Weight Championship of the World!

In the red corner, we hate him, we loath him, I’ve even heard people say he should be deprecated, it’s the Cursor!!!

Facing him in the blue corner, loved on the forums, praised by developers the world over, everyone’s friend, the Loop!!!

Let’s get ready to RUMBLE!!!!

Welcome along to our new series of SQL Smackdowns where we pitch two methods of achieving something against each other to find out which is the most efficient.

Now before I get going I’m going to be the first to put my hand up and admit that any RBAR operation is going to be nasty and we should really be avoiding doing them in favour of a set based approach where possible. But there are plenty of use cases where a set based approach just isn’t possible, we might be doing an admin task where we need to cycle through a list of databases or a particular set of data and perform a task. In those cases we need a way of looping through the data to perform an action.

We all know that cursors are bad and many people will avoid using them at all costs. The forums often advise against their use and I’ve seen plenty of code where they’re replaced with something else. The thing that they’re often replaced with is a WHILE loop.

Every day I come across code where a developer or DBA has used a WHILE loop similar to those in my examples in place of a CURSOR, but are loops really any better than cursors? We decided to find out.

To test this out we created the following table and populated it with 1 million rows.

CREATE TABLE CursorsLoops2 (ID INT, GUID UNIQUEIDENTIFIER)

ROUND 1

In this first test we’ll simply spin through every row in that table and print it out. So let’s start with the cursor.

DECLARE @guid UNIQUEIDENTIFIER DECLARE @id INT DECLARE Test CURSOR FOR SELECT ID, GUID FROM CursorsLoops OPEN Test FETCH NEXT FROM Test INTO @id, @guid WHILE @@FETCH_STATUS = 0 BEGIN PRINT CAST(@id AS VARCHAR(50)) + ' ' + CAST(@guid AS VARCHAR(50)) FETCH NEXT FROM Test INTO @id, @guid END CLOSE test DEALLOCATE test

The query completed in 47 seconds

So let’s see how a WHILE loop get on doing the same thing…

DECLARE @guid UNIQUEIDENTIFIER DECLARE @id INT = 0 SELECT TOP 1 @id = id, @guid = guid FROM CursorsLoops WHERE id > @id ORDER BY id WHILE @id IS NOT NULL BEGIN PRINT CAST(@id AS VARCHAR(50)) + ' ' + CAST(@guid AS VARCHAR(50)) SELECT TOP 1 @id = id, @guid = guid FROM CursorsLoops WHERE id > @id ORDER BY id END

Well, I gave up after the query had run for two minutes and in that time it had managed to get through only 625 rows out of the million.

BOOM! Cursor body slams Loops into the canvas, we felt that from up here.

WOW, that’s a massive win for the cursor but what’s really going on and why was the loop so much slower? Let’s take a look at the execution plan for both.

Cursor Execution Plan

So for every iteration of the cursor we’re having to scan the table, that’s not looking too nice. So what’s the loop doing to make it that much slower?

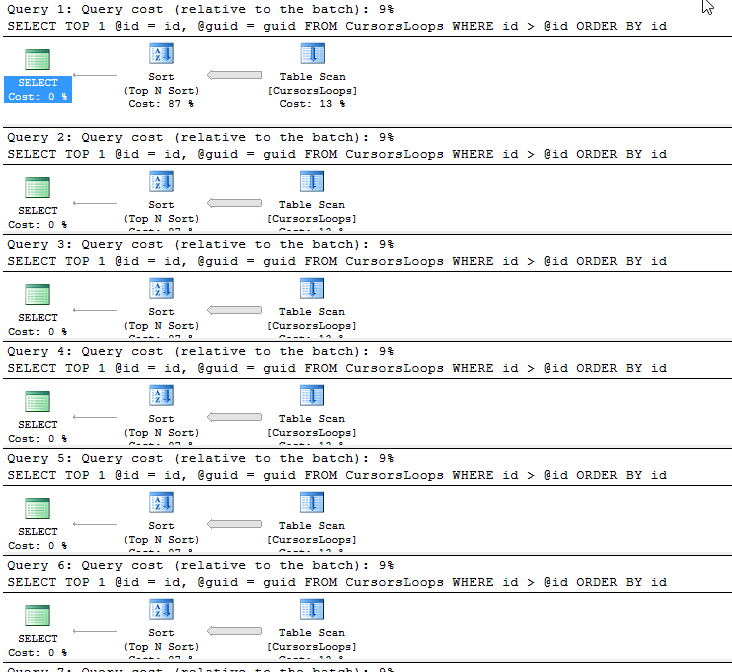

Loop Execution Plan

Well the difference looks to be that sort. Ok, the loop is having to sort the data before it can do anything. Let us level things out a bit and sort the cursor to see what happens then.

Round 2

We’ll change the code of the cursor to order the data first, will that give the loop the edge? At the least it’ll put them on a level footing.

DECLARE @guid UNIQUEIDENTIFIER DECLARE @id INT DECLARE Test CURSOR FOR SELECT ID, GUID FROM CursorsLoops ORDER BY ID OPEN Test FETCH NEXT FROM Test INTO @id, @guid WHILE @@FETCH_STATUS = 0 BEGIN PRINT CAST(@id AS VARCHAR(50)) + ' ' + CAST(@guid AS VARCHAR(50)) FETCH NEXT FROM Test INTO @id, @guid END CLOSE test DEALLOCATE test

This time the cursor took 43 seconds to get through the million rows, that’s marginally quicker than when we were using an unordered cursor. WFT? Let’s have a look at the execution plan now.

This time our cursor is doing something totally different, instead of scanning the dataset on every iteration, it’s running into some data structure and then just performing a seek on that structure each time it performs a fetch.

Check out Aaron Bertrand’s excellent investigation on the performance of the various cursor options.

When we don’t actually specify any cursor options SQL Server will make a decision on what options to use, as we’re seeing here. Just out of interest, what are the read counts looking like for the scripts that we’ve looked at so far.

Loops – 3,776 reads per iteration (over a million iterations that’s 3,776,000,000 reads)

Unordered Cursor – 3 reads per iteration

Ordered Cursor – 3 reads per iteration

Aaron Bertrand tells us that declaring a cursor with LOCAL FAST_FORWARD gives us the best possible performance, let’s try rerunning the unordered cursor with those options specified and see how it performs.

DECLARE @guid UNIQUEIDENTIFIER DECLARE @id INT DECLARE Test CURSOR LOCAL FAST_FORWARD FOR SELECT ID, GUID FROM CursorsLoops ORDER BY ID OPEN Test FETCH NEXT FROM Test INTO @id, @guid WHILE @@FETCH_STATUS = 0 BEGIN PRINT CAST(@id AS VARCHAR(50)) + ' ' + CAST(@guid AS VARCHAR(50)) FETCH NEXT FROM Test INTO @id, @guid END CLOSE test DEALLOCATE test

25 seconds with only 1 read per iteration.

With that huge right hook, Loops goes down. He looks to be out but somehow manages to climb to his feet as bell goes. He staggers to his corner, bloodied.

Round 3

So far we’ve been working on looping through a heap, I wonder if we created a clustered index on ID we’d see a different result.

With the index added, the LOCAL FAST_FORWARD cursor is done in 21 seconds with one read per iteration.

Lets see if the loop can snatch back some pride here.

With the clustered index, the loop was finished in 59 seconds with 3 reads per iteration. Now that’s a huge improvement on what we were seeing for it but it’s still falling way behind the cursor.

Loops is up against the ropes here, one, two swift body shots and he’s on the floor and out for the count.

The Cursor is our SQL Undercover Smackdown Champion!

Conclusion

In the example that I have been looking at, although indexing will increase the performance of a WHILE loop there was no point when it out performed the CURSOR and once we started to use LOCAL FAST_FORWARD cursors, the loop lagged a long way behind.

This is probably going to be quite controversial, it goes against a lot of advice that’s out there and please bear in mind that this is only one specific example, but looking at my findings it might be worth not being quite so quick to shoot cursors down in the future.

Of course you do need to think about your particular use case and if a cursor is the best way to go or if the same could be accomplished using a more efficient set based approach.

Wow, thanks for doing all this work to get data. I remember being advised against cursors once SQL 6.5 came out and finally got rid of them once we had table variables. Hopefully the objectivity will dispel bad dogma.

LikeLike

Table variables, now don’t get me started on those 😉

LikeLike

Something didn’t quite look right so I created a little test harness modelled on yours:

DROP TABLE #CursorsLoops

CREATE TABLE #CursorsLoops

(ID INT IDENTITY(1,1) PRIMARY KEY CLUSTERED,

[GUID] UNIQUEIDENTIFIER)

INSERT INTO #CursorsLoops ([GUID]) SELECT TOP 1000000 NEWID() FROM SYS.COLUMNS A, SYS.COLUMNS B

UPDATE STATISTICS #CursorsLoops WITH FULLSCAN

Then I set up a slightly different loop (yours doesn’t exit, it runs forever):

DECLARE @guid UNIQUEIDENTIFIER

DECLARE @id INT = 0

WHILE @id @id

ORDER BY id

–PRINT CAST(@id AS VARCHAR(50)) + ‘ ‘ + CAST(@guid AS VARCHAR(50))

IF @id = 1000000 BREAK

END

— 00:00:40 with PRINT statement

— 00:00:24 without PRINT statement

Then I set up a cursor loop:

DECLARE @guid UNIQUEIDENTIFIER

DECLARE @id INT = 1

DECLARE Test CURSOR LOCAL FAST_FORWARD FOR

SELECT ID, GUID

FROM #CursorsLoops

OPEN Test

FETCH NEXT FROM Test INTO @id, @guid

WHILE @@FETCH_STATUS = 0

BEGIN

–PRINT CAST(@id AS VARCHAR(50)) + ‘ ‘ + CAST(@guid AS VARCHAR(50))

FETCH NEXT FROM Test INTO @id, @guid

END

CLOSE test

DEALLOCATE test

— 00:00:50 with PRINT statement

— 00:00:26 without PRINT statement

Notice the timings? Some variation between runs, a statistical analysis would probably confirm that the loop and the cursor run in _almost_ the same time frame.

What surprised me is how close they are in terms of performance, given how much work goes on under the covers with the cursor.

LikeLike

Thanks for that, I’ll check my loop code when I’ve got 5 minutes. It was working, although I was tweaking it quite a bit so might have managed to post up a bugged version by mistake.

I think the interesting thing is that cursors certainly aren’t any worse than a loop despite what a lot of people would have you believe.

The only thing that I’d be careful of in your code is that you’re assuming that the table has 1000000 rows and that there are no predicates.

LikeLike

I have done similar tests and gotten similar results. I am curious how using the cursor options “LOCAL STATIC READ_ONLY FORWARD_ONLY” would turn out. I believe (but have not done much research into this) that the reason everyone hates cursors is that they are “slow” and/or “expensive”, and that this is due to cursors, by default, maintaining locks (especially if using a scrollable cursor, which I believe is the default) and needs to re-query to verify rows per fetch (there is a @@FETCH_STATUS for “row not there anymore”). This is why I typically use the “STATIC” option which copies the result set to an internally managed temp table, thus not locking any base tables or needing to re-query across JOINs, etc. But you don’t need STATIC if the cursor is over a temp table already (that just slows it down).

LikeLike

I think a lot of the cursor hate just comes down to a misunderstanding of when they should and when they shouldn’t be used.

I’ll happily admit that cursors are slow and inefficient when compared to a set based approach and they should be avoided in those instances. But there are times when a set based approach just isn’t possible, perhaps you need to perform an admin function on every table in the database. You couldn’t use a set based approach to do that and that’s when a cursor would be the right thing to turn to.

The problem I think is because cursors have such a bad rep that DBAs and developers will go to all sorts of lengths to avoid them and come up with different weird and wonderful alternatives that very often perform no better and usually far worse than the thing that they’re trying to replace.

LikeLiked by 1 person

Another reason they were avoided – and we advised against the – was that people would forget to de-allocate them, or otherwise abuse them. The beauty of looping with a table variable is that it went out of scope. Also, I think there was some “it’s old and unsexy” meme too.

LikeLike

Those are issues with bad coding practices rather than cursors themselves, used inappropriately they can be nasty things.

As far as I know, even if a cursor’s not been deallocated, once it goes out of scope it’s destroyed. That may or may not have been the case in the SQL2000 and earlier days, I don’t remember there being an issue but that doesn’t mean that there wasn’t one. 🙂 I might have to do some investigations to check that out.

LikeLike

Best Intro Ever!! Pay Per View!

LikeLike

It makes sense to me. Cursors are designed to work as part of a process, where everything in a while loop is essentially an independent request.

Just as a convenient and simple example, let us say you are producing a running total on 1 million rows. There is no set based solution. Performing a million independent searches to find and retrieve the million independent rows you need to process is *NOT* the most efficient way to process. Even with a clustered index, you are wasting cycles starting from square one with each of a million SEEK operations, when what you really need is one continuous SCAN processing and making changes as you go. Because of its nature, a cursor can get a lot closer to doing the latter than an ordinary while loop ever could.

As an aside, another terrible way to handle this example would be joining the table to itself with a less than condition and SUM aggregate. It would still be RBAR under the hood–just masked with the join. You would perform exponentially more addition than necessary, but I have seen developers do silly things just like this to avoid the *appearance* of RBAR.

Thank you for taking the time to challenge dogma with data. Much appreciated!

LikeLike

“Just as a convenient and simple example, let us say you are producing a running total on 1 million rows. There is no set based solution. ”

There are IIRC about six different set-based solutions to running totals, most of which have been extensively explored and compared over the years. Here’s the most comprehensive of those articles: http://www.sqlservercentral.com/articles/T-SQL/68467/

LikeLike